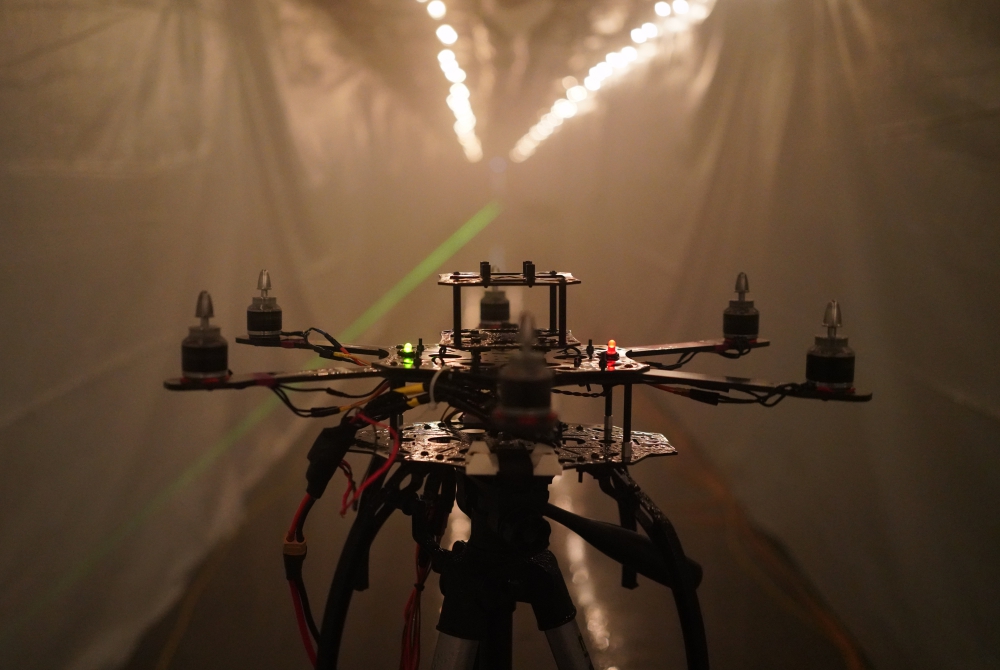

The frame of an unmanned aerial vehicle is installed at the end of a 180-foot-long chamber filled with fog at Sandia National Labs in Albuquerque, New Mexico.

While the sun beat down on the New Mexico desert, inside, a dense fog hung in the air. In a special facility outside Albuquerque, a team of NASA researchers was working with the kind of fog that’s so thick you can’t see three feet in front of you.

The ability to perceive things through the fog was the reason for their visit – but not our human ability. Rather, the engineers were testing sensors likely to be used on future air vehicles such as urban air taxis. There won’t be a human pilot on board these small aircraft, and they’ll need new ways of seeing and sensing the environment to help them take off, fly, and land safely.

Instruments such as optical and infrared cameras, radar, and lidar devices will become their “eyes.” Fog presents a major challenge to those sensors, and how well today’s technology can take it in stride is an important question. The answer will drive the next phase of their development and help these aircraft fly autonomously.

For their study, the team from NASA’s Ames Research Center in California’s Silicon Valley needed a special facility that could make fog on demand. The fog chamber at Sandia National Laboratories in Albuquerque, New Mexico, can repeatedly produce, with scientific precision, a fog with the specific density needed.

Fog: Common, Calm, and Extreme

Fog is an extreme environment for perception technology, but it’s weather that commuters and others will still want to fly in. It’s common enough and calm enough: you don’t have turbulence or lightning with fog. Still, our high-tech “eyes” can’t yet see through it the way they’ll need to.

NASA research engineer Nick Cramer walks into fog produced at a specific density inside a special facility at Sandia National Labs. Cramer’s team tested the capabilities of current sensor technologies to perceive obstacles – including people – through the fog. The green laser light is part of a system used to measure the fog’s density.

The signals emitted by a lidar device scanning an area for a safe landing spot might reflect off the water droplets in fog, instead of the objects they’re meant to detect. Each of the sensors that might be used on unpiloted passenger aircraft in the future is impacted differently by fog, and designers need to know how.

“Each sensor has its strengths and weaknesses, and they’re affected by fog to different degrees,” said Nick Cramer, a research engineer at Ames. “We don’t know which will end up on these vehicles, so we tested a suite of sensors in the chamber to quantify their pros and cons.”

Vision Tests Through the Fog

Stretching for 180 feet, Sandia’s fog chamber resembles a long concrete corridor lined with plastic sheeting that traps the fog in the test area. A string of incandescent lights along the ceiling looks like something you’d see on a restaurant’s patio, but it provides important consistent lighting across the space.

From the control room, Cramer and his colleagues from the Revolutionary Aviation Mobility group, part of NASA’s Transformational Tools and Technologies project, watched as 64 sprinklers in the ceiling came alive.

“The sprayers release a mixture of water and salt to create fog made of particles of a larger size,” said Jeremy Wright, an optical engineer at Sandia. “It’s actually difficult to get just right. Depending on the conditions, the water droplets want to either condense more water out of the air and grow or give water back to the air as humidity.”

At one end of the chamber, the frame of an unmanned aerial vehicle, commonly known as a drone, stood bolted to a stand. The UAV was a test target for the collection of sensors installed at the opposite end. The researchers measured how well each sensor could detect the UAV – or its warm motors, in the case of an infrared camera – from different distances through different levels of fog.

Data for the Next Generation of Sensors

These tests, which study how far and how well today’s technology can see in foggy weather, will help determine how safe an aircraft relying on that technology would be. NASA will release the data for use by companies and researchers working to develop information processing techniques and improve sensors for Advanced Air Mobility vehicles. They need this kind of data to build accurate computer simulations, discover new challenges, and validate their technology for flight.

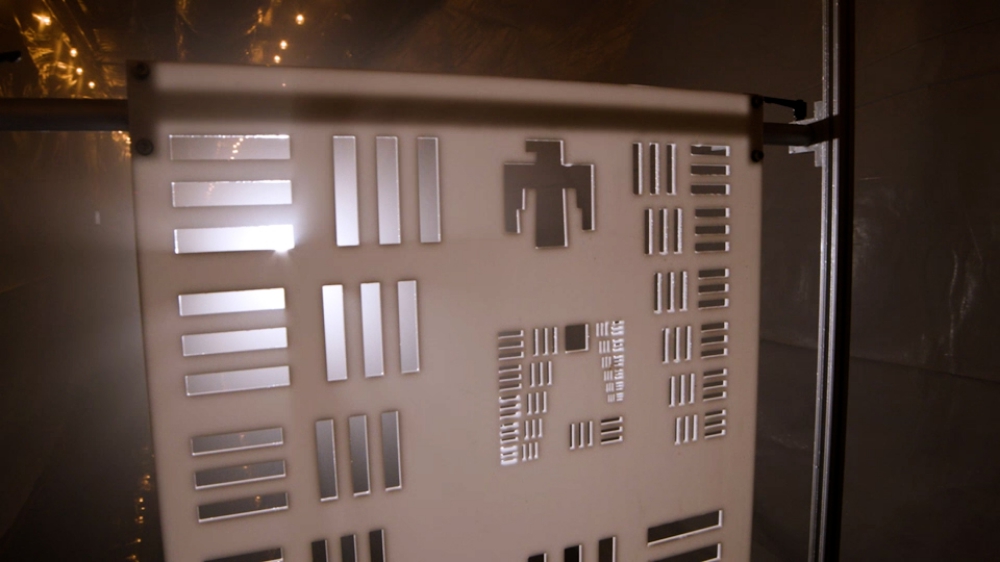

This panel with its rectangular gaps of different sizes works like an eye chart for cameras. During tests with visual and infrared cameras and a lidar scanner, the panel helped NASA researchers determine how much detail each sensor could make out through the fog.

NASA, meanwhile, will continue its fundamental research to understand how best to use these aircraft sensors. With different strengths and weaknesses, optical, radar, lidar, and other systems are complementary. A fusion of different sensors combined in the smartest ways will help make the market opened by Advanced Air Mobility a safe, productive reality.

Photos: Sandia National Labs

Source: Press Release