Conflict, climate issues, forced displacement, and acute food insecurity are creating a growing need for new aerial autonomy solutions that can protect human life and assure the delivery of essential supplies in dangerous environments. Over the next ten to fifteen years, we can expect major advances in our understanding of how these new solutions can be used, the infrastructures that will enable them, the regulations that will safeguard us, and the drivers that will accelerate adoption.

In this article I will explore one of the most complicated aspects of uncrewed aerial flight that must be addressed before the technology can be safely rolled out and embraced – assessing safe emergency landing spots when operating beyond the line of sight.

Why emergency landing site evaluation is a data problem

At its very core, emergency landing site evaluation is a data problem. It is about understanding the world around the Uncrewed Aircraft System and reasoning over this information to make a series of informed decisions. The system must first create an accurate world model by fusing datasets from different sources across the stages of a mission: planning, perception, segmentation, fusion, and landing.

During the planning stage, before an Uncrewed Aircraft System has even taken flight, it must have access to map information, satellite data, weather reports or mission specifics like no-fly zones. Each of these helps to paint a picture of the environment an Uncrewed Aircraft System will fly through, how it can find natural efficiencies in the air, and ultimately where it might land in an emergency. Compared to observations the aircraft can make with its own sensors, these world models may be low resolution and possibly inaccurate, so they have to be updated and reassessed once up in the air.

Perceiving the world as it really is

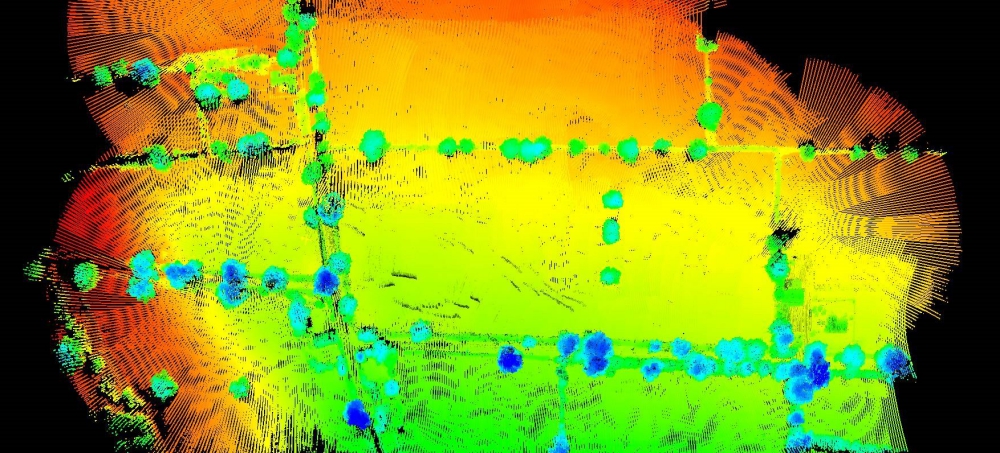

During flight, Uncrewed Aircraft Systems must rely on a combination of different sensor types to accurately perceive the world around them. High resolution cameras act much like the human eye, creating a visual representation of the world. LiDAR provides shape, depth, and the ability to accurately measure distances with pulsing lasers. Radar provides a longer range, data-rich picture of surroundings in all conditions including low visibility.

Sensor types like these offer the prospect of performance beyond the sum of their parts. But only in recent times have technological advancements made it possible for small, low mass Uncrewed Aircraft Systems to fly with several combined. This is a crucial milestone for the industry, one that raises the bar on safety while unlocking a vast array of new commercial opportunities (which I will explain at the end of this piece).

The segmentation challenge

The next step for Uncrewed Aircraft Systems is to go beyond sensing raw data, and actually understand the environment, its elements, and how their spatial configuration might be changing, so as to derive actionable information.

Segmentation is the first step in this process, meaning a partitioning of data, followed by classification of scene elements. In the case of camera data, we talk about semantic segmentation if there is a category/class label for every pixel in the image, where each class corresponds to an object of a certain type, for example a power line pole, tree, vehicle, etc.

Panoptic segmentation, however, goes a step further: objects of the same type keep the same class label, but each distinct object also receives its own instance identifier, enabling different instances of the same type of object to be tracked. For example, two people would both be classed as "person" but would be assigned different instance identifiers. These identifiers could be their names.
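A minimal sketch of the difference between the two outputs, in Python. The toy grid, the class names, and the `to_panoptic` helper are all assumptions made for illustration. In a real system the instances would be predicted by a trained model; here a simple 4-connected flood fill stands in for that step.

```python
from collections import deque

# Toy semantic map: one class label per pixel (hypothetical classes).
SEMANTIC = [
    ["grass", "person", "grass", "person"],
    ["grass", "person", "grass", "person"],
    ["grass", "grass", "grass", "grass"],
]

# Countable object classes that should be split into instances.
THING_CLASSES = {"person"}


def to_panoptic(semantic, thing_classes):
    """Return a grid of (class, instance_id) pairs.

    Background classes get instance_id None. Pixels of countable classes
    are grouped into instances by 4-connected flood fill, a stand-in for
    a learned instance predictor.
    """
    rows, cols = len(semantic), len(semantic[0])
    panoptic = [[(semantic[r][c], None) for c in range(cols)] for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            cls = semantic[r][c]
            if cls not in thing_classes or seen[r][c]:
                continue
            next_id += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                panoptic[y][x] = (cls, next_id)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and semantic[ny][nx] == cls:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return panoptic


PANOPTIC = to_panoptic(SEMANTIC, THING_CLASSES)
```

In the semantic map both people carry the same label and cannot be told apart. In the panoptic result each pixel also carries an instance identifier, so the two people can be tracked separately from frame to frame.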

Semantic segmentation is an important component in visual assessment of terrain, supporting manoeuvres such as autonomous emergency landings, where it can provide exact location and extent of suitable areas such as runways, or areas covered by low-growth vegetation, while rejecting unsuitable areas such as water, urban structures, or major roads.
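As a rough illustration of how a per-pixel class map could feed a landing decision, the sketch below marks each cell as suitable or not and then looks for square windows that are entirely suitable. The class names, the grid, and the functions `landing_mask` and `find_clear_squares` are hypothetical. A real assessment would also account for slope, obstacles, wind, and the vehicle's footprint.

```python
# Hypothetical class names; the split follows the examples in the text.
SUITABLE = {"runway", "low_vegetation"}

GRID = [
    ["water", "low_vegetation", "low_vegetation", "road"],
    ["water", "low_vegetation", "low_vegetation", "road"],
    ["building", "low_vegetation", "runway", "runway"],
]


def landing_mask(semantic):
    """True where the class of a cell is considered suitable for landing."""
    return [[cls in SUITABLE for cls in row] for row in semantic]


def find_clear_squares(mask, size):
    """Return (row, col) top-left corners of size x size all-suitable windows."""
    rows, cols = len(mask), len(mask[0])
    corners = []
    for r in range(rows - size + 1):
        for c in range(cols - size + 1):
            window = (mask[r + dr][c + dc] for dr in range(size) for dc in range(size))
            if all(window):
                corners.append((r, c))
    return corners


MASK = landing_mask(GRID)
CANDIDATES = find_clear_squares(MASK, size=2)
```

On this toy grid the candidate windows start at `(0, 1)` and `(1, 1)`. Any window that touches water, the road, or the building is rejected.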

The biggest challenge in implementing a highly accurate segmentation system lies in gathering a sufficiently comprehensive set of data to permit optimisation of a machine learning model for segmentation. For autonomous driving applications these datasets exist and are constantly improved by the academic community as well as industrial efforts. With time we will have the same for Uncrewed Aircraft Systems.

The fusion of human and machine intelligence

In the fusion phase, an Uncrewed Aircraft System is required to combine the segmentation results (derived from the raw sensor data) with external datasets (maps, weather reports, vehicle data etc.) to update its model of the world and ultimately determine a safe landing spot. This process is essentially the fusion of human and machine intelligence.

In simple scenarios, datasets will agree with each other. For example, an area may be marked as unsafe to land by both human input and machine reading. In more complex instances where datasets disagree, AI may be required to correct a previous human input to make a safe, informed decision.
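One possible way to express this agree/disagree logic is sketched below. The `Assessment` type, the `fuse` function, and the 0.8 override threshold are assumptions chosen for illustration, not a description of any fielded system. The rule is deliberately conservative: when the sources disagree and the in-flight observation is not confident enough, the area is treated as unsafe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    safe: bool          # is the area judged safe to land on?
    confidence: float   # 0.0 to 1.0, hypothetical scale
    source: str         # e.g. "human_map" or "onboard_perception"


def fuse(prior, observed, override_threshold=0.8):
    """Combine a pre-mission assessment with an in-flight observation.

    Returns (safe, reason). The threshold is an illustrative assumption.
    """
    if prior.safe == observed.safe:
        return observed.safe, "sources agree"
    if observed.confidence >= override_threshold:
        return observed.safe, "observation overrides prior"
    return False, "sources disagree, treated as unsafe"


human = Assessment(safe=True, confidence=0.6, source="human_map")
flooded = Assessment(safe=False, confidence=0.95, source="onboard_perception")
hazy = Assessment(safe=False, confidence=0.4, source="onboard_perception")

RESULT_CONFIDENT = fuse(human, flooded)
RESULT_UNCERTAIN = fuse(human, hazy)
```

In the first case a field marked safe on the pre-mission map is confidently observed to be unsafe, so the observation corrects the earlier human input. In the second case the observation is weak, and the fallback still rejects the site. Hard constraints such as no-fly zones would normally sit outside a rule like this and not be open to override.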

This natural fusion of information suggests a “staircase” of steps toward full autonomy. To reach full autonomy, the industry must quickly move from a remotely piloted aircraft, to autopilot, to AI analysis of landing sites pre-mission with human approval, to in-flight site ID with a human in the loop for the decision to land, up to fully automated decision making. Naturally, different operational domains and requirements will permit differing levels of autonomy.
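The staircase can be modelled as an ordered set of levels, with each operational domain capping how far up the vehicle may climb. The enum member names and the helper functions below are hypothetical labels for the five steps listed above.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical names for the five steps of the staircase."""
    REMOTELY_PILOTED = 1
    AUTOPILOT = 2
    PRE_MISSION_AI_HUMAN_APPROVAL = 3
    IN_FLIGHT_SITE_ID_HUMAN_IN_LOOP = 4
    FULLY_AUTOMATED = 5


def effective_level(requested, domain_max):
    """Cap the requested level at what the operational domain permits."""
    return min(requested, domain_max)


def human_decides_landing(level):
    """A human makes or approves the landing decision below the top step."""
    return level < AutonomyLevel.FULLY_AUTOMATED


LEVEL = effective_level(
    AutonomyLevel.FULLY_AUTOMATED,
    AutonomyLevel.IN_FLIGHT_SITE_ID_HUMAN_IN_LOOP,
)
```

Here a vehicle capable of fully automated decisions is flown in a domain that only permits in-flight site identification with a human in the loop, so the lower level applies and a human still decides whether to land.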

Looking into the future

Uncrewed Aircraft Systems have come a long way. New technologies including AI, automation, IoT, 5G, and big data have together ushered in a new era of advanced air mobility solutions that will connect the skies and unlock previously unattainable possibilities. Not all problems have been solved in their entirety, far from it, but autonomous operations are becoming increasingly commonplace across the globe.

Images supplied by Mapix Technologies Ltd.

About the Author

Ian Foster is Head of Engineering, Animal Dynamics. He leads the research, design and validation teams responsible for delivering STORK STM, an uncrewed autonomous heavy-lift parafoil vehicle that will protect human life in dangerous environments.