A system to allow air- and ground-based robot vehicles to work together, without GPS signals or expensive sensor devices, is being developed by a team at Yonsei University in Korea. This could be used by unmanned vehicle teams to explore environments, ranging from farms here on earth to the surfaces of other worlds.


No man’s land

Unmanned ground vehicles (UGVs) are already used for a number of “3D jobs” (dangerous, dirty or difficult), including bomb disposal and exploring the surface of Mars. Their range of applications is expanding to include reconnaissance, transport, border control, disaster-area exploration and agriculture.

UGV operation can be impeded by a reliance on line-of-sight sensing: on-board sensors cannot see what lies beyond nearby obstacles, which can significantly slow down exploration. Unmanned aerial vehicles (UAVs), on the other hand, can quickly obtain an overview of large areas, but cannot match UGVs for endurance, load carrying or up-close investigation of the surface, such as collecting soil samples.

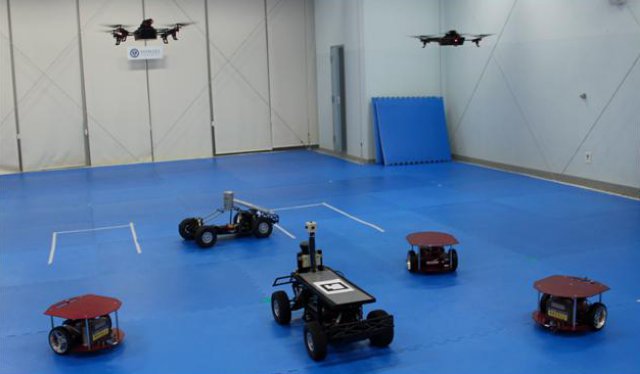

There are clear advantages to combining the use of both types of vehicle in a UGV/UAV cooperative system, an example of a heterogeneous multi-agent system. Creating such a system requires solutions to three main challenges: sensor technology to robustly detect and identify all the agents (UGVs and UAVs); precise relative positioning between the agents even where GPS is not available or reliable; and efficient communication between the agents.

For UGV path planning, one of the most important things UAVs can provide is an obstacle map of the area of operation. Previous UAV approaches to obtaining depth information about ground objects have used relatively expensive sensors such as LIDAR, RADAR and SONAR, but the work reported in this issue of Electronics Letters uses much less expensive visual sensors, i.e. cameras. It also uses those same cameras to address the identification, positioning and GPS-reliance challenges.
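To show why an obstacle map is so useful for UGV path planning, here is a minimal sketch. It assumes the map has already been reduced to a small occupancy grid (1 = obstacle, 0 = free ground) and that the UGV moves between 4-connected cells. The article does not say which planner the Yonsei team uses, so the grid, the function name `plan_path` and the choice of breadth-first search are all hypothetical illustrations, not the team's method.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid via breadth-first search.

    Hypothetical helper: grid[r][c] == 1 marks an obstacle (e.g. taken from a
    UAV-derived obstacle map), 0 marks free ground. Returns None if the goal
    cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parent links to rebuild the path.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in parent):
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    # Hypothetical obstacle map: a wall with a single gap in the bottom row.
    obstacle_map = [
        [0, 0, 1, 0],
        [0, 0, 1, 0],
        [0, 0, 0, 0],
    ]
    print(plan_path(obstacle_map, (0, 0), (0, 3)))
```

Without the overhead map, a UGV relying on its own line-of-sight sensors would only discover the wall and its gap by driving up to it; with the map, the detour is planned before the vehicle moves.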

Eyes in the sky

The system uses stereo-vision depth sensing to provide the obstacle map, and other image processing techniques to identify and track all the agents in the system, relative to each other and the environment, so GPS information is not needed.

Stereo-vision depth sensing is well established, but its accuracy degrades when the distance to the observed objects becomes much larger than the separation between the two cameras (the baseline). When both cameras are mounted on the same UAV, the baseline, and therefore the useful sensing range, is necessarily small. In the work by the team from Yonsei’s School of Integrated Technology and the Yonsei Institute of Convergence Technology, this limitation is addressed by using a single camera on each of two UAVs, which provides a much larger baseline that can also be controlled by adjusting the separation between the UAVs.
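The effect of camera separation can be made concrete with the textbook relation for a rectified stereo pair, Z = fB/d, where f is the focal length in pixels, B the baseline and d the disparity. Propagating a disparity error δd to first order gives a depth error of roughly Z²δd/(fB): it grows with the square of the distance and shrinks as the baseline grows. The sketch below assumes this simple pinhole model; the focal length, range and baselines are hypothetical values chosen only for comparison and are not figures from the Yonsei work.

```python
"""Illustrative stereo range-error comparison (all numbers hypothetical)."""


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from its disparity in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty: |dZ| ~= Z**2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    focal_px = 1000.0  # assumed focal length, in pixels
    depth_m = 50.0     # assumed distance from the cameras to the ground objects
    for label, baseline_m in [("one UAV, 0.2 m baseline", 0.2),
                              ("two UAVs, 10 m baseline", 10.0)]:
        err = depth_error(focal_px, baseline_m, depth_m)
        print(f"{label}: about {err:.2f} m depth error per pixel of disparity error")
```

With these assumed values the single-UAV rig is uncertain by about 12.5 m per pixel of disparity error at 50 m, while the 10 m two-UAV baseline brings that down to about 0.25 m, a 50-fold improvement that simply mirrors the 50-fold increase in B.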

The initial motivation for the work was polar exploration, but there are many applications that could benefit from the approach, as team member Prof. Jiwon Seo explained: “We are also working on precise farming applications according to a research request from a Korean company. The role of UAVs in this case is not just limited to obstacle detection. UAVs equipped with multi-spectral imaging sensors can collect useful information on crops over a large farming area. Based on this information, unmanned tractors could operate more efficiently. As a longer-term development, the system can be applied to emergency patient transport and military applications.”

Cooperative future

While the team is now working to demonstrate the system outside the laboratory, the control, image processing and hardware advances made while building the prototype are already benefiting other work at Yonsei. One example is the ‘D-board’, the name the team have given to the small, lightweight, low-power sensor/processor board they developed to handle control and communication between the agents in the system. Within a 25 × 25 mm area, it incorporates a microprocessor, motor drivers, the necessary sensors such as an accelerometer, a gyroscope and a barometer, and Bluetooth communication capability. Seo says that it has already proved useful for other applications, including “intelligent robots, IoT devices, and media art applications”.

This other work reflects the team’s wider interest in heterogeneous multi-agent systems and UGV/UAV cooperative systems. The Yonsei researchers believe such systems offer many potential benefits over single-agent and homogeneous multi-agent systems, because agents with different strengths and weaknesses can complement each other. “The multi-UAV stereo-vision system is just a single example of potential collaborations between UGVs and UAVs,” said Seo. “We think that it is a quite natural path for robotics research to move in this direction over the next decade. We expect many novel real-world applications, beyond the polar exploration robots and precise farming that we are currently working on, which can expand the horizon of robotics and provide real benefits to our society.”

Source: Phys Org